YouTube Is Requiring “Realistic” AI-Generated Videos To Be Labeled

Attempting to avoid misleading viewers with synthetic or altered content.

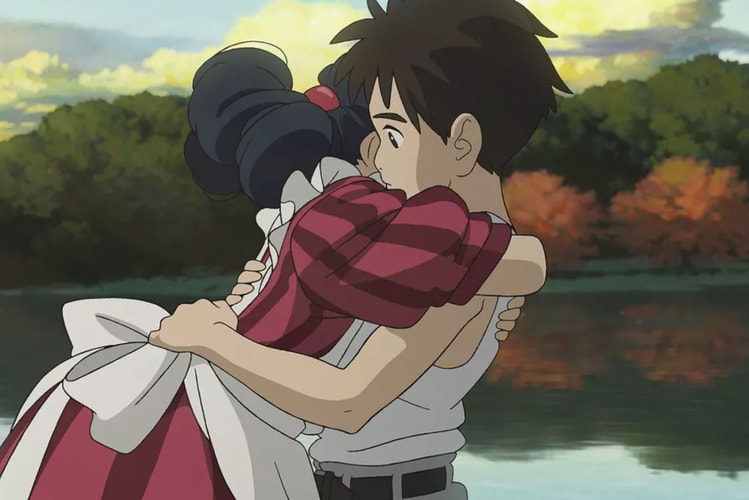

As the capabilities of generative AI rapidly advance, YouTube is beefing up its own precautions. The platform's main concern is that viewers will mistake AI-generated content for the real deal.

In November, YouTube announced that it would soon introduce a disclosure system for realistic-looking AI content. The platform refers to such content as “synthetic” and “altered material,” a category that also covers real footage that has been modified using AI.

Months later, YouTube has rolled out a new tool in its Creator Studio that lets creators label content as made using AI. The feature appears as a simple yes-or-no checkbox under a subheading reading “Altered content.”

It seems that videos that are obviously AI-generated won’t require labels, and YouTube is counting on creators to use their own discretion when applying the label.

For instance, the platform won’t require creators to flag AI that was merely used to help produce a video, such as to generate a script or captions, so the label applies mainly to the visuals shown in the video itself.

YouTube also said that it “won’t require creators to disclose when synthetic media is unrealistic and/or the changes are inconsequential.”